Introduction: Diet – The Cornerstone of Health Management, A New Perspective with AI

“Food is the first necessity of the people.” Diet is fundamental to sustaining life and health. For patients with chronic diseases, appropriate dietary control is an indispensable part of disease management. However, accurately recording and analyzing daily dietary intake, and providing personalized nutritional advice based on this, is a tedious and challenging task for many. Traditional dietary recording methods (like handwritten diaries or manual app input) are often time-consuming, laborious, and prone to omissions or inaccurate estimations.

To address this pain point, BIT Research Alliance is developing advanced AI-driven food recognition systems based on Multimodal Large Language Models (Multimodal LLMs). Our goal is to make dietary logging simpler and smarter, and to provide scientific, precise, personalized nutritional guidance for everyone, especially home-care patients who require strict dietary management.

The Technological Core of AI Food Recognition: The Magic of Multimodal Large Language Models

Traditional image recognition models can perform well in narrowly defined scenarios, but food is hard to recognize: dishes vary enormously in type, appearance, and cooking method, and are often served mixed together on one plate. Multimodal Large Language Models offer new ways to tackle these challenges:

- Multimodal Input Understanding:

  - Image-centric: The core of the system is its ability to “understand” food images. Users simply take a photo of their meal, and AI can extract rich visual features from the image.

  - Text-assisted (Optional): If users can provide additional textual descriptions (e.g., “steamed cod with less oil,” “oatmeal with nuts”), it can further improve the accuracy and detail of recognition.

  - Voice Input (Optional): In the future, voice input could even be integrated, allowing users to directly describe their meals verbally.
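To make the input side concrete, here is a minimal Python sketch of how a required meal photo and an optional text note might be packed into a single token sequence for an LLM. Everything in it is an assumption for illustration: the `MealInput` type, the special boundary IDs `BOI`/`EOI`/`BOT`/`EOT`, and the toy tokenizers `toy_image_tokens` and `toy_text_tokens` are hypothetical stand-ins; a real system would use a learned image tokenizer and the base model's own text tokenizer.

```python
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical special-token IDs marking modality boundaries (illustrative only).
BOI, EOI = 1, 2  # begin / end of image tokens
BOT, EOT = 3, 4  # begin / end of user text tokens
VOCAB_OFFSET, VOCAB_SIZE = 100, 1024  # keep toy IDs clear of the special tokens


@dataclass
class MealInput:
    image: bytes                   # required: photo of the meal
    text: Optional[str] = None     # optional: e.g. "steamed cod with less oil"
    audio: Optional[bytes] = None  # optional: reserved for future voice input


def toy_image_tokens(image: bytes, n_tokens: int = 8) -> List[int]:
    """Hypothetical stand-in for a learned image tokenizer: one ID per byte chunk."""
    if not image:
        raise ValueError("a meal photo is required")
    chunk = max(1, len(image) // n_tokens)
    return [VOCAB_OFFSET + sum(image[i * chunk:(i + 1) * chunk]) % VOCAB_SIZE
            for i in range(n_tokens)]


def toy_text_tokens(text: str) -> List[int]:
    """Hypothetical stand-in for a text tokenizer: one deterministic ID per word."""
    return [VOCAB_OFFSET + sum(map(ord, w)) % VOCAB_SIZE for w in text.split()]


def build_sequence(meal: MealInput) -> List[int]:
    """Concatenate the image segment and optional text segment into one sequence."""
    if meal.audio is not None:
        raise NotImplementedError("voice input is not supported in this sketch")
    seq = [BOI, *toy_image_tokens(meal.image), EOI]
    if meal.text:  # text is optional and only refines what the image shows
        seq += [BOT, *toy_text_tokens(meal.text), EOT]
    return seq


seq = build_sequence(MealInput(image=b"\x10\x20\x30" * 100, text="oatmeal with nuts"))
print(len(seq))  # 15
```

Marking each modality with its own boundary tokens lets the model tell image content apart from the user's text; voice input is deliberately left unimplemented, mirroring its optional, future status.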

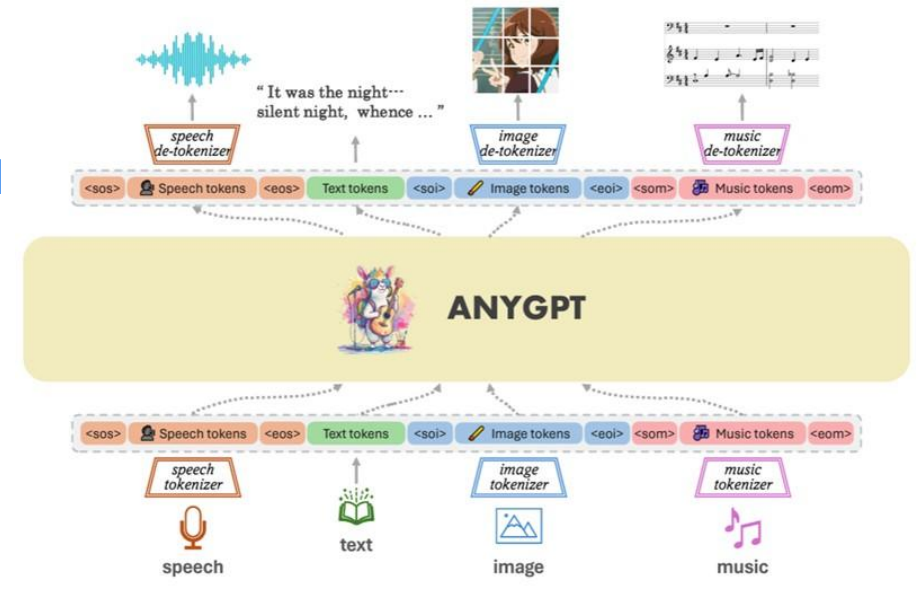

- AnyGPT Core Architecture:

  - Image tokenizer/encoder: Converts the input food image into a sequence of tokens (image tokens) that the LLM can process.

  - Text tokenizer/encoder: Processes any supplementary textual descriptions.

  - LLM Core Processing: The Large Language Model receives the encoded information from each modality and uses its semantic understanding and reasoning capabilities to analyze image features and textual cues together, identifying food types and ingredients.

  - Output Generation: The model outputs the recognition results, such as a list of food names, estimated portion sizes, and primary nutritional components.

- Targeted Model Training and Optimization:

  - Food-Domain Training Data: We trained our model on a dataset of 5,000 food-image records for food recognition and nutritional analysis, covering a range of common dishes, ingredients, and cooking styles.

  - Nutrition Database Integration: Recognition results are linked with professional nutritional composition databases to estimate detailed nutritional information such as calories, protein, fat, carbohydrates, vitamins, and minerals.

  - Llama-3-8B Base Model: We chose Llama-3-8B as a comparatively resource-efficient base model. Compared with much larger models such as GPT-4 and Claude, it reduces token usage by 15%, making it better suited to resource-constrained home-care and mobile deployments.
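The step from the model's recognition output to a nutrition estimate can be sketched as a lookup-and-scale operation. This is a minimal sketch under stated assumptions: the JSON output schema (`food`, `portion_g`), the `estimate_nutrition` function, and the per-100 g values in `NUTRIENTS_PER_100G` are all hypothetical placeholders, not real nutrient data or the system's actual interface.

```python
import json
from typing import Dict, List, Tuple

# Placeholder per-100 g values for illustration only; a real deployment would
# query a professional nutrient composition database instead.
NUTRIENTS_PER_100G: Dict[str, Dict[str, float]] = {
    "steamed cod": {"kcal": 100.0, "protein_g": 20.0, "fat_g": 1.0, "carbs_g": 0.0},
    "oatmeal": {"kcal": 70.0, "protein_g": 2.5, "fat_g": 1.5, "carbs_g": 12.0},
}


def estimate_nutrition(model_output: str) -> Tuple[Dict[str, float], List[str]]:
    """Scale per-100 g values by each recognized portion and sum them.

    Assumes the model emits a JSON list such as
    [{"food": "steamed cod", "portion_g": 150}, ...] (hypothetical schema).
    Returns the meal totals plus any food names missing from the table.
    """
    totals = {"kcal": 0.0, "protein_g": 0.0, "fat_g": 0.0, "carbs_g": 0.0}
    unmatched: List[str] = []
    for item in json.loads(model_output):
        per_100g = NUTRIENTS_PER_100G.get(item["food"].strip().lower())
        if per_100g is None:
            unmatched.append(item["food"])  # flag for manual review
            continue
        scale = float(item["portion_g"]) / 100.0
        for nutrient, value in per_100g.items():
            totals[nutrient] += value * scale
    return totals, unmatched


output = (
    '[{"food": "Steamed cod", "portion_g": 150},'
    ' {"food": "oatmeal", "portion_g": 200},'
    ' {"food": "mystery stew", "portion_g": 250}]'
)
totals, unmatched = estimate_nutrition(output)
print(totals)     # {'kcal': 290.0, 'protein_g': 35.0, 'fat_g': 4.5, 'carbs_g': 24.0}
print(unmatched)  # ['mystery stew']
```

Returning unmatched names keeps unrecognized dishes visible for review instead of silently omitting them from the meal record.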

Highlights and Advantages of BIT Research Alliance’s AI Food Recognition System

- Designed for Home Care Patients: Specifically focuses on the practical needs of patients in a home environment, striving for simple operation and easy-to-understand results.

- Automated Recording and Recommendations: Enables automated logging of dietary content and can provide preliminary dietary advice based on recognition results and preset health goals (e.g., carbohydrate intake limits for diabetic patients, protein and potassium control for kidney disease patients).

- Efficient Base Model Selection: Building on Llama-3-8B lets the system maintain good performance while using resources more efficiently and keeping operating costs low.

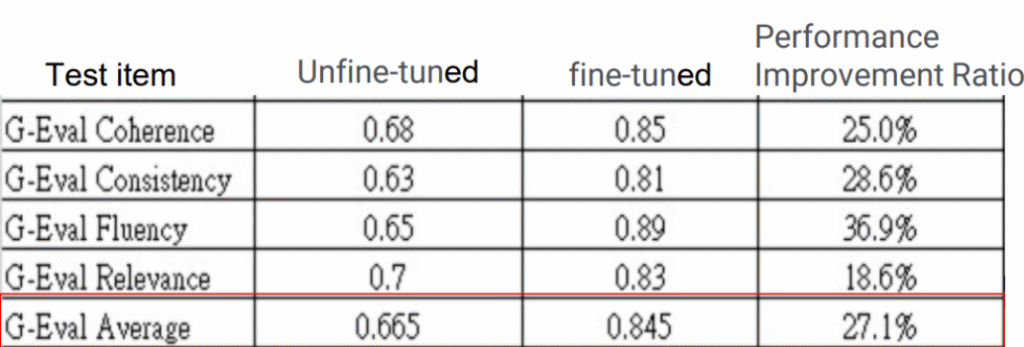

- Significant Performance Improvement: Targeted optimization and fine-tuning improved our food recognition model across multiple evaluation metrics. After fine-tuning, its average G-Eval score rose from 0.665 to 0.845, a relative improvement of 27.1%. Because G-Eval rates generated output for coherence, consistency, fluency, and relevance, this gain indicates that the model's recognition results became substantially more coherent, consistent, fluent, and relevant.
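The 27.1% figure is a relative improvement over the baseline score, not an absolute one. A short Python check (the function name `relative_improvement` is ours, for illustration) reproduces it from the two reported averages:

```python
def relative_improvement(before: float, after: float) -> float:
    """Relative change from a baseline score, in percent."""
    if before == 0:
        raise ValueError("baseline score must be non-zero")
    return (after - before) / before * 100.0


before, after = 0.665, 0.845  # average G-Eval scores before / after fine-tuning
gain = relative_improvement(before, after)
print(f"absolute gain: {after - before:.3f}")  # absolute gain: 0.180
print(f"relative gain: {gain:.1f}%")           # relative gain: 27.1%
```

In absolute terms, the average rose by 0.18 points on G-Eval's scale.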

The Path to Personalized Nutrition

AI food recognition is merely the first step towards achieving personalized nutrition. BIT Research Alliance’s long-term goal is to integrate it into a more complete health management ecosystem:

- Convenient Dietary Logging: Users can easily complete dietary records by taking photos.

- Accurate Nutritional Analysis: AI automatically recognizes food and analyzes its nutritional components.

- Personalized Health Profiles: Combines personalized information such as the user’s health status, allergy history, dietary preferences, and exercise levels.

- Dynamic Nutritional Advice: AI dynamically adjusts nutritional recommendations based on daily dietary records and personal health profiles to ensure balanced and appropriate nutrient intake.

- Collaboration with Healthcare Professionals: Dietary reports generated by the system can be shared with doctors or nutritionists as a reference for their professional guidance, enabling collaborative patient-provider management.
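One way to picture the dynamic-advice step is a running comparison of the day's logged intake against per-nutrient limits stored in the user's health profile. The sketch below is a simple rule-based stand-in, not the system's AI-driven method; `HealthProfile`, `daily_advice`, and the limit values are hypothetical, and the numbers are placeholders rather than clinical recommendations.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class HealthProfile:
    # Hypothetical daily upper limits per nutrient, e.g. agreed with a doctor or dietitian.
    daily_limits: Dict[str, float] = field(default_factory=dict)


def daily_advice(meals: List[Dict[str, float]], profile: HealthProfile) -> List[str]:
    """Sum logged meals and compare each tracked nutrient to its daily limit."""
    totals: Dict[str, float] = {}
    for meal in meals:
        for nutrient, amount in meal.items():
            totals[nutrient] = totals.get(nutrient, 0.0) + amount
    advice: List[str] = []
    for nutrient, limit in sorted(profile.daily_limits.items()):
        eaten = totals.get(nutrient, 0.0)
        if eaten > limit:
            advice.append(f"{nutrient}: {eaten:.0f} exceeds the daily limit of {limit:.0f}")
        else:
            advice.append(f"{nutrient}: {limit - eaten:.0f} remaining today")
    return advice


# Placeholder limits for a patient managing carbohydrate and potassium intake.
profile = HealthProfile({"carbs_g": 150, "potassium_mg": 2000})
meals = [{"carbs_g": 60, "potassium_mg": 800}, {"carbs_g": 100, "potassium_mg": 500}]
for line in daily_advice(meals, profile):
    print(line)
# carbs_g: 160 exceeds the daily limit of 150
# potassium_mg: 700 remaining today
```

Keeping the limits in the profile rather than in the logic means a doctor or nutritionist could adjust them per patient without changing any code.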

Challenges and Future Outlook

AI food recognition technology still faces some challenges, such as:

- Accurate Recognition of Complex Meals: Difficulty in recognizing dishes with complex ingredients, multiple layers, or non-standardized preparations.

- Precision of Portion Size Estimation: Accurately estimating food portion sizes has always been a challenge for image recognition.

- Regional Dietary Differences: Significant variations in dietary habits across different regions and cultures require models with broader adaptability.

- Continuous Data Updates: Food types and nutritional information are constantly changing, requiring continuous updates to models and databases.

BIT Research Alliance will continue to invest in R&D to improve the recognition accuracy and robustness of our models, expand the coverage of our food database, and explore more advanced portion size estimation techniques. We are also actively exploring the integration of food recognition with other physiological sensor data (such as blood glucose monitoring) to provide more real-time, closed-loop personalized nutritional interventions.

Conclusion: AI Makes “Eating Right” Simpler and More Scientific

AI-driven food recognition technology opens up entirely new possibilities for personalized nutrition management. By applying advanced Multimodal Large Language Models to everyday dietary scenarios, BIT Research Alliance is striving to enable everyone to manage their dietary health more easily and scientifically. We believe this technology will not only help chronic disease patients better control their conditions but also allow more health-conscious individuals to achieve more precise nutritional goals, thereby improving overall quality of life.