【Introduction】

Large Language Models (LLMs) are among the most consequential technologies in artificial intelligence today and are transforming many industries, with medicine a field of particularly large potential. The “Large Language Model Foundation Model Group” at H.I.T. (Health, Innovation, Technology) develops, optimizes, and applies state-of-the-art foundation language models. These models serve as a solid, efficient, and reliable core engine for upper-layer medical AI applications such as medical agents, knowledge graph augmentation, and multimodal analysis. We are committed to creating next-generation Foundation Models that understand natural language, carry deep medical expertise, and adapt to complex medical scenarios.

I. Building a Powerful and Efficient Core for Medical Language Intelligence

H.I.T. has made significant strides in the R&D and application of LLM Foundation Models:

- Selection and Deep Optimization of Advanced Foundation Models:

- We closely follow global LLM development trends, actively evaluating and adopting industry-leading open-source or commercial foundation models (such as the Llama series and the Breeze-7B-instruct-v1 model mentioned in the presentation).

- More importantly, we conduct deep optimization and fine-tuning of these base models to meet the specific needs of the medical domain. This includes training with vast amounts of professional medical text (e.g., 7 billion tokens of chronic disease health education documents), clinical data (e.g., 2,200 QA data samples), and multimodal information, making them more medically knowledgeable and clinically relevant.

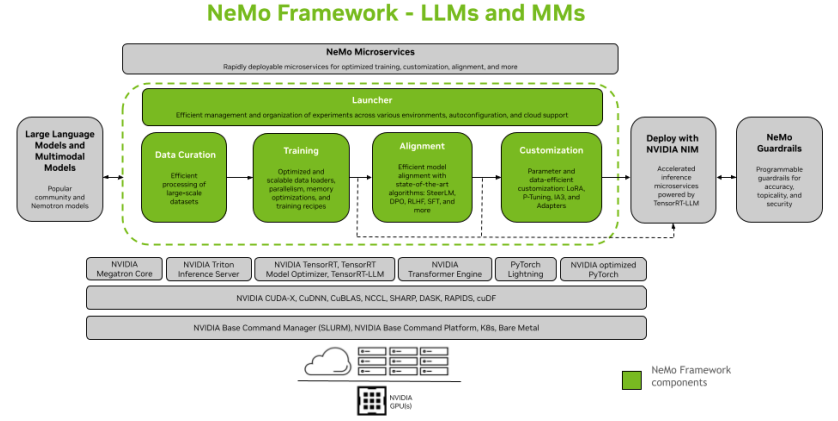

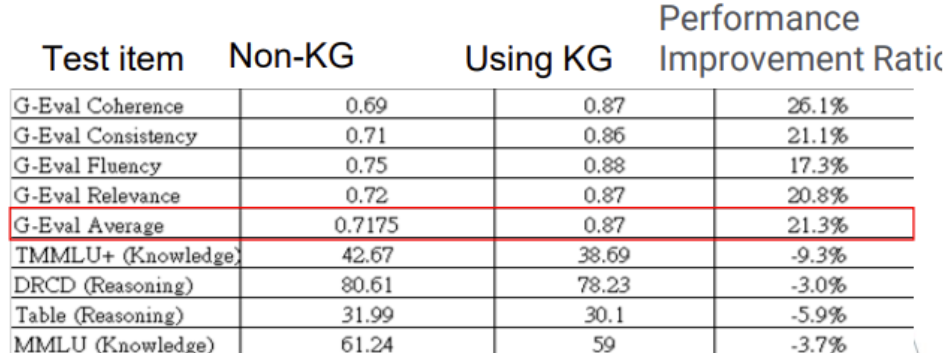

- Visual Aid Suggestion: The NVIDIA NeMo Framework slide showcases our platform for training and customizing LLMs. The table shows that Breeze-7B-instruct-v1, as a base model, improves significantly in performance when combined with a knowledge graph. Also shown is the performance improvement of Llama-3-8B on a food recognition task after fine-tuning.

- Underlying Support for Multimodal Capabilities:

- Our Foundation Models are not limited to text processing; they support the fusion and generation of multimodal information from their core architecture. This means the models can understand and correlate information from text, images (medical scans), speech (doctor-patient conversations), and even video, providing a foundation for more complex medical AI applications.

- Visual Aid Suggestion: The “Any to Any Models” concept diagram illustrates the LLM as the core of multimodal transformation. The multimodal Mixture of Experts (MoE) architecture, the AnyGPT food image recognition example on P.11, and the NeVA framework processing X-ray images on P.26 all reflect the multimodal potential of Foundation Models.

- Exploration and Application of Mixture of Experts (MoE) Technology:

- To enhance model performance while maintaining computational efficiency, we actively explore and apply Mixture of Experts (MoE) technology. MoE allows the model to dynamically invoke the most relevant “expert sub-networks” when processing different tasks or data, thereby achieving higher accuracy with fewer active parameters.

- Visual Aid Suggestion: A slide detailing the principles and advantages of MoE technology and listing advanced models such as Mixtral 8x7B and DBRX MoE, followed by one showing how MoE can be applied in multimodal scenarios.

- Improving Model Efficiency and Resource Utilization:
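The routing idea behind MoE can be illustrated with a minimal sketch. It assumes a simple linear router and top-2 selection; the function `moe_forward`, the toy experts, and the gate weights are hypothetical illustrations, not the group's actual implementation.

```python
import math

def softmax(xs):
    # Numerically stable softmax over a list of floats.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def moe_forward(x, gate_weights, experts, top_k=2):
    """Route input vector x to the top_k experts and mix their outputs.

    gate_weights: one weight vector per expert (hypothetical linear router).
    experts: list of callables mapping a vector to a vector of the same size.
    """
    # Router score per expert: dot product of its gate vector with x.
    scores = [sum(w * xi for w, xi in zip(ws, x)) for ws in gate_weights]
    # Keep only the top_k highest-scoring experts.
    chosen = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:top_k]
    # Renormalise the selected scores so the mixing weights sum to 1.
    mix = softmax([scores[i] for i in chosen])
    out = [0.0] * len(x)
    for weight, i in zip(mix, chosen):
        y = experts[i](x)  # only the chosen experts are evaluated
        out = [o + weight * yi for o, yi in zip(out, y)]
    return out, chosen

# Four toy experts, each scaling the input by a different constant.
experts = [lambda v, s=s: [s * vi for vi in v] for s in (1.0, 2.0, 3.0, 4.0)]
gate_weights = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [-1.0, -1.0]]
output, active = moe_forward([1.0, 2.0], gate_weights, experts, top_k=2)
print(active)  # indices of the two experts that were actually run
```

Because only `top_k` experts run per input, compute per token tracks the active parameters rather than the total parameter count.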

- We pay close attention to model computational efficiency and resource consumption. Through model architecture optimization (for example, Llama-3-8B adopts grouped-query attention, and its larger-vocabulary tokenizer yields up to 15% fewer tokens than Llama 2, as reported by Meta), quantization, knowledge distillation, and other techniques, we strive to maintain high performance while reducing model deployment costs and inference latency. This allows for broader application in practical medical settings, including edge devices.

- Visual Aid Suggestion: A comparison of the total and active parameters of different MoE models, highlighting their efficiency advantages, together with the note that Llama-3-8B is more resource-efficient than GPT-4 and Claude.
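Quantization, one of the techniques named above, can be sketched as follows. This is a minimal illustration of symmetric per-tensor int8 quantization on a toy weight list; the helper names are hypothetical, and production toolchains typically use per-channel scales and calibration data.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization of floats to the range [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    # One shared scale maps the largest magnitude onto 127.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q, scale):
    """Map int8 codes back to approximate float values."""
    return [qi * scale for qi in q]

weights = [0.5, -1.27, 0.003, 1.0]  # toy values, not real model weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
# Rounding error per weight is bounded by half a quantization step.
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, max_error)
```

Each weight is stored in one byte instead of four, at the cost of a rounding error of at most half the scale per weight.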

- Providing Customized Model Services for Specific Medical Tasks:

- Based on our robust Foundation Models, we can quickly provide customized model fine-tuning and deployment services for specific medical application scenarios (such as auxiliary diagnosis for particular diseases, report generation for specific departments, medical literature summarization, etc.), meeting the personalized needs of different partners.

II. More Intelligent, Safer, and More Universal Foundation Models

H.I.T.’s Large Language Model Foundation Model Group will continue to lead at the technological forefront:

- Continuous Evolution of Foundation Model Architectures:

- We will constantly explore and experiment with more advanced model architectures (such as models with enhanced long-context processing capabilities, more efficient attention mechanisms, and stronger logical reasoning architectures) to meet the demands of increasingly complex medical tasks.

- (Reference to latest tech trends): Various Transformer variants and State Space Models (SSMs) such as Mamba aim to overcome bottlenecks of current LLMs, notably the quadratic cost of self-attention over long contexts.

- Enhancing Trustworthy AI Characteristics of Models:

- We will place the model’s explainability, robustness, fairness, and privacy protection at the core of our R&D. This includes exploring built-in explainability mechanisms, reducing model bias, and developing stronger privacy-preserving technologies (such as the application of differential privacy and homomorphic encryption in model training and inference) to ensure the models are safe and reliable in the sensitive medical domain.

- (Reference to latest tech trends): AI ethics and Trustworthy AI are current research hotspots, with related technologies and standards continuously improving.
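As one illustration of differential privacy in training, the core step of DP-SGD (per-example gradient clipping followed by Gaussian noise) can be sketched as below. The function name, the toy gradients, and the parameter values are assumptions for illustration; a real system would also need a privacy accountant to track the resulting privacy budget.

```python
import math
import random

def dp_average_gradient(per_example_grads, clip_norm, noise_multiplier, rng):
    """Clip each example's gradient to clip_norm, sum, add Gaussian noise, average."""
    dim = len(per_example_grads[0])
    total = [0.0] * dim
    for g in per_example_grads:
        norm = math.sqrt(sum(v * v for v in g))
        # Scale down only gradients whose L2 norm exceeds clip_norm.
        factor = min(1.0, clip_norm / norm) if norm > 0 else 1.0
        for j in range(dim):
            total[j] += g[j] * factor
    # Noise standard deviation is proportional to the clipping bound.
    sigma = noise_multiplier * clip_norm
    noisy = [t + rng.gauss(0.0, sigma) for t in total]
    return [v / len(per_example_grads) for v in noisy]

rng = random.Random(0)  # fixed seed so the sketch is reproducible
grads = [[3.0, 4.0], [0.3, 0.4]]  # toy per-example gradients
clean = dp_average_gradient(grads, clip_norm=1.0, noise_multiplier=0.0, rng=rng)
noisy = dp_average_gradient(grads, clip_norm=1.0, noise_multiplier=1.1, rng=rng)
print(clean, noisy)
```

Clipping bounds how much any single record can move the update, and the noise masks what remains of that influence.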

- Medical-Specific Pre-training and Knowledge Injection:

- In addition to pre-training on general domain data, we will invest more resources in pre-training on large-scale medical-specific data. This will equip our Foundation Models with a deep medical background from the outset.

- We will research more effective knowledge injection methods to more tightly integrate structured medical knowledge (such as medical ontologies and knowledge graphs) into the parameters of Foundation Models, rather than just relying on external calls via RAG.
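One simple way to move structured knowledge toward model parameters is to verbalize knowledge graph triples into sentences that can join a fine-tuning corpus. The sketch below shows only that option, under stated assumptions: the templates, relation names, and toy triples are hypothetical, and this is not a description of the group's actual injection method.

```python
# Hypothetical sentence templates, one per relation type.
TEMPLATES = {
    "treats": "{head} is used to treat {tail}.",
    "is_a": "{head} is a {tail}.",
}

def verbalize(triples, templates=TEMPLATES):
    """Turn (head, relation, tail) triples into sentences; skip unknown relations."""
    sentences = []
    for head, relation, tail in triples:
        template = templates.get(relation)
        if template is not None:
            sentences.append(template.format(head=head, tail=tail))
    return sentences

# Toy triples for illustration only.
triples = [
    ("Metformin", "treats", "type 2 diabetes"),
    ("Type 2 diabetes", "is_a", "chronic disease"),
    ("Metformin", "unmapped_relation", "placeholder"),
]
corpus = verbalize(triples)
print(corpus)
```

Unlike RAG, which looks facts up at inference time, sentences produced this way are consumed during training, so the knowledge ends up in the weights.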

- Aggressive Optimization for Lightweight Models and Edge Deployment:

- We will continue to push research in model miniaturization and lightweighting, developing Foundation Models that can run efficiently on resource-constrained edge devices (such as wearable medical devices and mobile terminals), making AI ubiquitous.

- (Reference to latest tech trends): Techniques like model quantization, pruning, and knowledge distillation are primary methods for achieving model lightweighting.

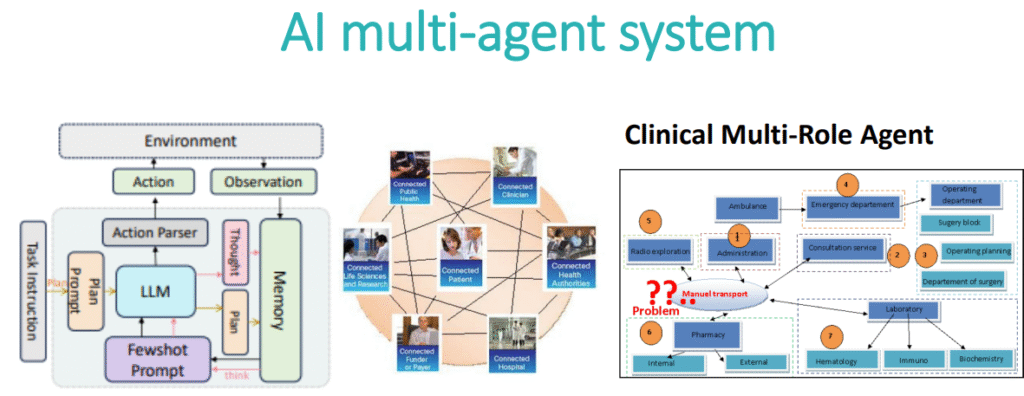
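Of the lightweighting techniques listed above, knowledge distillation can be sketched through its loss term: the student is trained to match the teacher's temperature-softened output distribution. The function names and toy logits below are illustrative assumptions; in practice this term is usually combined with the ordinary task loss.

```python
import math

def softmax(logits, temperature=1.0):
    # Higher temperature flattens the distribution.
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL(teacher || student) on softened distributions, scaled by temperature^2."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2

teacher = [4.0, 1.0, 0.5]  # toy logits from a large model
student = [2.5, 1.5, 0.2]  # toy logits from a small model
loss_mismatch = distillation_loss(teacher, student)
loss_match = distillation_loss(teacher, teacher)
print(loss_mismatch, loss_match)
```

The loss is zero when the student reproduces the teacher's distribution and grows as the two diverge.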

- Supporting More Complex AI Agents and Multi-Agent Collaboration:

- We will equip Foundation Models with stronger capabilities for tool invocation, task planning, and collaborative communication, so that they can serve as the core “brain” of more complex AI Agent systems (like the AI multi-agent system mentioned on Slide P.36). This will support multiple AI agents in collaboratively completing medical tasks.
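Tool invocation usually means the model emits a structured call that the host application parses and executes. The following minimal sketch assumes a JSON call format with `name` and `arguments` fields; the format, the `dispatch` function, and the `bmi` tool are hypothetical and do not describe a specific agent framework.

```python
import json

def bmi(weight_kg, height_m):
    """Example tool: body mass index rounded to one decimal place."""
    return round(weight_kg / height_m ** 2, 1)

# Registry of tools the agent is allowed to call.
TOOLS = {"bmi": bmi}

def dispatch(tool_call_json, tools=TOOLS):
    """Parse a model-emitted tool call and run the matching registered function."""
    call = json.loads(tool_call_json)
    name = call.get("name")
    if name not in tools:
        return {"ok": False, "error": f"unknown tool: {name}"}
    try:
        result = tools[name](**call.get("arguments", {}))
    except TypeError as exc:  # wrong or missing arguments
        return {"ok": False, "error": str(exc)}
    return {"ok": True, "result": result}

reply = dispatch('{"name": "bmi", "arguments": {"weight_kg": 70, "height_m": 1.75}}')
unknown = dispatch('{"name": "prescribe", "arguments": {}}')
print(reply, unknown)
```

Restricting execution to a fixed registry keeps the model from triggering arbitrary code, which matters in a sensitive medical setting.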

III. The Underlying Powerhouse for the Entire Medical AI Ecosystem

Powerful Foundation Models are the cornerstone of the entire intelligent healthcare ecosystem, holding immense commercial potential:

- Medical AI Model-as-a-Service (MaaS):

- Offer high-performance, medically optimized Foundation Model API services to medical technology companies, hospitals, and research institutions. This will enable them to quickly build and deploy their own upper-layer AI applications, lowering the barrier to AI development.

- Customized Medical Language Model Solutions:

- Provide end-to-end Foundation Model customization services for clients with special requirements (such as large hospital chains, pharmaceutical companies, medical device manufacturers), including data processing, model training, fine-tuning, deployment, and ongoing maintenance.

- Empowering Medical AI Application Development Platforms:

- Build a medical AI application development platform based on our Foundation Models, offering rich development tools, pre-built components, and industry templates to help developers rapidly create various medical AI applications.

- Medical Big Data Analytics and Insight Services:

- Utilize the powerful natural language understanding and information extraction capabilities of Foundation Models to provide in-depth analysis and insight services for vast amounts of medical text data (such as medical records, literature, health discussions on social media) to medical institutions, government health departments, and research institutions. This will support clinical research, public health decision-making, and industry trend forecasting.

- Cross-Lingual Medical Information Services:

- Leveraging the multilingual processing capabilities of Foundation Models, provide high-quality cross-lingual medical information translation, summarization, and retrieval services, promoting the exchange and sharing of global medical knowledge.

【Conclusion】

Foundation Models are the key to unlocking intelligent healthcare, and H.I.T.’s “Large Language Model Foundation Model Group” is dedicated to making that key as sharp and reliable as possible. We believe that through continuous technological breakthroughs and a deep understanding of medical scenarios, our Foundation Models will be a sustained driving force for the development of medical AI, ultimately advancing the intelligent transformation of healthcare services for the benefit of people worldwide.