Introduction: A Powerful Engine Empowering Cutting-Edge AI Research

As Generative Artificial Intelligence (Generative AI) transforms one field after another at an unprecedented pace, research institutions need efficient, scalable, and flexible development tools. Training, fine-tuning, and deploying advanced models such as Large Language Models (LLMs) and Multimodal Models (MMs) requires robust underlying frameworks that can cope with ever-growing model sizes and data complexity.

BIT Research Alliance has consistently worked at the forefront of applied AI. To turn our ideas in fields such as healthcare into tangible results quickly, we have adopted and deeply integrated the NVIDIA NeMo™ framework. This end-to-end, cloud-native framework gives us the tools and capabilities needed to develop, customize, and deploy large-scale Generative AI models.

Overview of the NVIDIA NeMo™ Framework

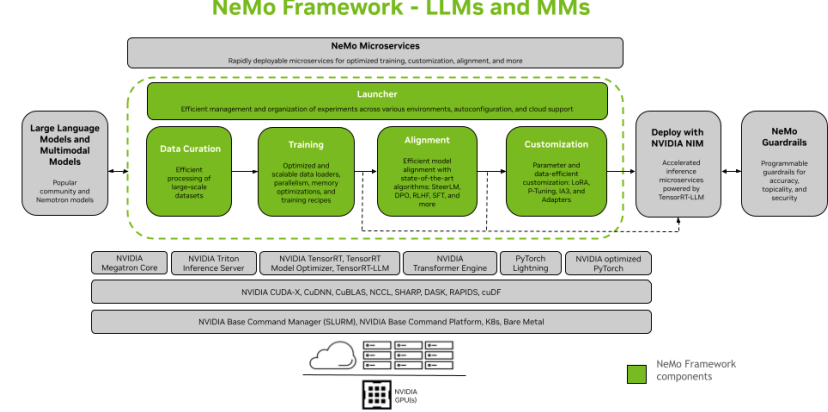

NVIDIA NeMo™ is an enterprise-grade framework designed specifically for large-scale Generative AI. It offers a range of pre-trained models, training scripts, and tools for data processing, model customization, and large-scale inference. Its core features include:

- Scalability and Cloud-Native Architecture:

  - NeMo is designed for large-scale training in multi-GPU and multi-node environments, taking full advantage of NVIDIA GPU acceleration.

  - Its cloud-native architecture makes it straightforward to deploy and manage on cloud platforms or on-premises clusters.

- Comprehensive Model Support:

  - Supports a range of advanced Generative AI model architectures, including Large Language Models (LLMs) and Multimodal Models (MMs).

  - Provides foundation models trained on trillions of tokens as a starting point for further customization.

- End-to-End Development Workflow Support:

  - Data Curation: Tools for efficiently processing large-scale datasets.

  - Training: Optimized data loaders, parallelization strategies, memory-optimization techniques, and efficient training recipes.

  - Alignment: Support for state-of-the-art alignment techniques such as SteerLM, DPO, RLHF, and SFT, which help align model outputs with human expectations and task-specific requirements.

  - Customization: Parameter-efficient and data-efficient methods such as LoRA, P-Tuning, and IA3, which let researchers quickly fine-tune pre-trained models on their own datasets.

  - Large-Scale Deployment (Deploy with NVIDIA NIM): Integration with NVIDIA NIM (NVIDIA Inference Microservices), which packages trained models as optimized inference microservices for efficient, low-latency deployment.

  - NeMo Guardrails: Programmable guardrails for controlling model accuracy, topicality, and safety, supporting reliable and responsible AI applications.
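DPO, one of the alignment techniques listed above, trains a model directly on preference pairs without a separate reward model. The plain-Python sketch below is not NeMo's implementation. It computes the standard DPO objective for a single pair, $-\log\sigma\big(\beta[(\log\pi_\theta(y_w|x)-\log\pi_{\text{ref}}(y_w|x))-(\log\pi_\theta(y_l|x)-\log\pi_{\text{ref}}(y_l|x))]\big)$. It assumes that the sequence log-probabilities under the trained policy and a frozen reference model are already available. The value `beta=0.1` is illustrative only.

```python
import math


def log_sigmoid(x: float) -> float:
    """Numerically stable log(sigmoid(x))."""
    if x >= 0:
        return -math.log1p(math.exp(-x))
    return x - math.log1p(math.exp(x))


def dpo_loss(
    policy_chosen_logp: float,
    policy_rejected_logp: float,
    ref_chosen_logp: float,
    ref_rejected_logp: float,
    beta: float = 0.1,  # illustrative value, not a NeMo default
) -> float:
    """DPO loss for one preference pair (chosen y_w vs. rejected y_l).

    Each argument is a summed sequence log-probability log pi(y | x),
    assumed to be precomputed for the policy and the frozen reference model.
    """
    # How much more (in log space) the policy favors each response than the reference does
    chosen_logratio = policy_chosen_logp - ref_chosen_logp
    rejected_logratio = policy_rejected_logp - ref_rejected_logp
    # The loss shrinks as the policy widens its preference for the chosen response
    margin = beta * (chosen_logratio - rejected_logratio)
    return -log_sigmoid(margin)


if __name__ == "__main__":
    # Policy identical to the reference: margin is 0, so the loss is log 2
    print(round(dpo_loss(-10.0, -12.0, -10.0, -12.0), 4))  # 0.6931
    # Policy shifts probability toward the chosen response: loss drops below log 2
    print(dpo_loss(-9.0, -13.0, -10.0, -12.0) < math.log(2))  # True
```

The reference model anchors the update. The policy is rewarded only for preferring the chosen response *more than the reference already does*, which keeps it from drifting arbitrarily far from its starting point.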

How BIT Research Alliance Leverages the NeMo™ Framework to Accelerate Innovation

The NVIDIA NeMo™ framework plays an indispensable role in various research projects at BIT Research Alliance:

- Rapid Prototyping and Model Iteration:

  - NeMo’s pre-trained models and standardized training workflows let us quickly build and test new AI model concepts, shortening the cycle from idea to initial validation.

  - For example, when developing language models for domain-specific medical text, we start from NeMo’s LLM foundation models and fine-tune them efficiently on the medical corpora we have collected.

- Handling Large-Scale, Multimodal Medical Data:

  - Medical data is often massive and multimodal. NeMo’s data-processing tools and multimodal model support help us manage and use this complex data effectively.

  - In projects such as our multimodal chronic disease management framework and AI food recognition, NeMo has supported joint processing and model training across data types, including images and text.

- Achieving Efficient Model Customization and Alignment:

  - Specific medical scenarios (such as diagnostic assistance for particular diseases or health guidance for specific populations) require us to customize general-purpose models. Parameter-efficient fine-tuning techniques provided by NeMo, such as LoRA, let us adapt models quickly with limited data and computational resources.

  - In addition, alignment techniques such as RLHF (Reinforcement Learning from Human Feedback) let us steer models toward content that better conforms to medical professional standards and ethical requirements.

- Optimizing Deployment and Inference Efficiency:

  - Efficient deployment and inference are crucial for turning research outcomes into practical medical AI tools. Combined with TensorRT-LLM™ and NVIDIA Triton™ Inference Server, NeMo lets us optimize trained models and serve them as high-performance inference services that meet the real-time demands of clinical applications.

  - This is particularly important for our LINE chatbots, which require real-time responses, and for our edge-computing AI systems.

- Ensuring Reliability and Safety of AI Applications:

  - The reliability and safety of medical AI are our top priorities. NeMo Guardrails let us set clear behavioral boundaries for AI models. These boundaries guard against inaccurate, irrelevant, or harmful output and make our AI solutions more trustworthy.
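The claim that LoRA can adapt models "with limited data and computational resources" can be made concrete. LoRA freezes a pretrained weight matrix $W$ and trains only a low-rank update $BA$, so the adapted layer computes $Wx + \frac{\alpha}{r}BAx$. The plain-Python sketch below illustrates the technique itself and is not NeMo's implementation. It also counts trainable parameters for a hypothetical 4096 × 4096 projection at rank 8. These dimensions, and the names `lora_forward` and `trainable_params`, were chosen for illustration.

```python
from typing import List, Tuple

Matrix = List[List[float]]
Vector = List[float]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def lora_forward(W: Matrix, A: Matrix, B: Matrix, x: Vector, alpha: float, rank: int) -> Vector:
    """Adapted layer output: W x + (alpha / rank) * B (A x).

    W is the frozen d_out x d_in pretrained weight; only A (rank x d_in)
    and B (d_out x rank) would be trained.
    """
    base = matvec(W, x)               # frozen pretrained path
    delta = matvec(B, matvec(A, x))   # low-rank trainable path
    scale = alpha / rank
    return [b + scale * d for b, d in zip(base, delta)]


def trainable_params(d_out: int, d_in: int, rank: int) -> Tuple[int, int]:
    """Return (full fine-tuning count, LoRA count) for one weight matrix."""
    return d_out * d_in, rank * (d_in + d_out)


if __name__ == "__main__":
    # Hypothetical 4096 x 4096 projection adapted at rank 8
    full, lora = trainable_params(4096, 4096, 8)
    print(full, lora, f"{100 * lora / full:.2f}%")  # 16777216 65536 0.39%

    # With B initialized to zero (the usual LoRA init), the adapter starts as a no-op
    W = [[1.0, 2.0], [3.0, 4.0]]
    A = [[1.0, 0.0]]
    B_zero = [[0.0], [0.0]]
    print(lora_forward(W, A, B_zero, [1.0, 1.0], alpha=2.0, rank=1))  # [3.0, 7.0]
```

For this layer, the adapter trains about 0.39% of the parameters that full fine-tuning would update. Because $B$ starts at zero, training begins exactly at the pretrained model's behavior.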

The Value the NeMo™ Framework Brings to BIT Research Alliance

Integrating the NVIDIA NeMo™ framework has brought multifaceted value to BIT Research Alliance:

- Increased R&D Efficiency: Significantly shortens the development, training, and optimization time for AI models.

- Enhanced Model Performance: Enables the training of larger, more complex, and higher-performing Generative AI models.

- Lowered Technical Barriers: Standardized tools and workflows make it easier for our researchers to master advanced AI technologies.

- Accelerated Translation of Results: More quickly transforms cutting-edge AI research outcomes into solutions that can address real-world medical problems.

Conclusion: NVIDIA NeMo™ — The Solid Backbone for BIT Research Alliance’s Generative AI Innovation

With its comprehensive features, strong performance, and enterprise-grade reliability, the NVIDIA NeMo™ framework has become a technological cornerstone of BIT Research Alliance’s research and application development in Generative AI. Building on it, we can explore the possibilities of AI in healthcare more quickly and effectively, continuously delivering outcomes that genuinely benefit patients and society. We look forward to continuing to lead medical AI development with the support of the NeMo™ framework.