Introduction: Beyond Flatlands – Envisioning a 3D Medical Future

Traditional medical imaging, such as X-rays and CT scans, provides crucial evidence for disease diagnosis, but its two-dimensional presentation limits how well clinicians can understand complex anatomical structures and plan intricate surgeries. With the rapid development of Artificial Intelligence (AI) technology, particularly breakthroughs in image generation and 3D reconstruction, healthcare is entering a new era in which we can “see” deeper and in three dimensions.

BIT Research Alliance is committed to applying cutting-edge AI technology to the automatic generation of 3D medical images and the construction of precise surgical models. Our goal is to provide clinicians with more intuitive and accurate visualization tools, thereby enhancing diagnostic efficiency, optimizing surgical planning, improving patient outcomes, and revolutionizing medical education.

AI-Driven Medical Image Reporting and 3D Image Generation

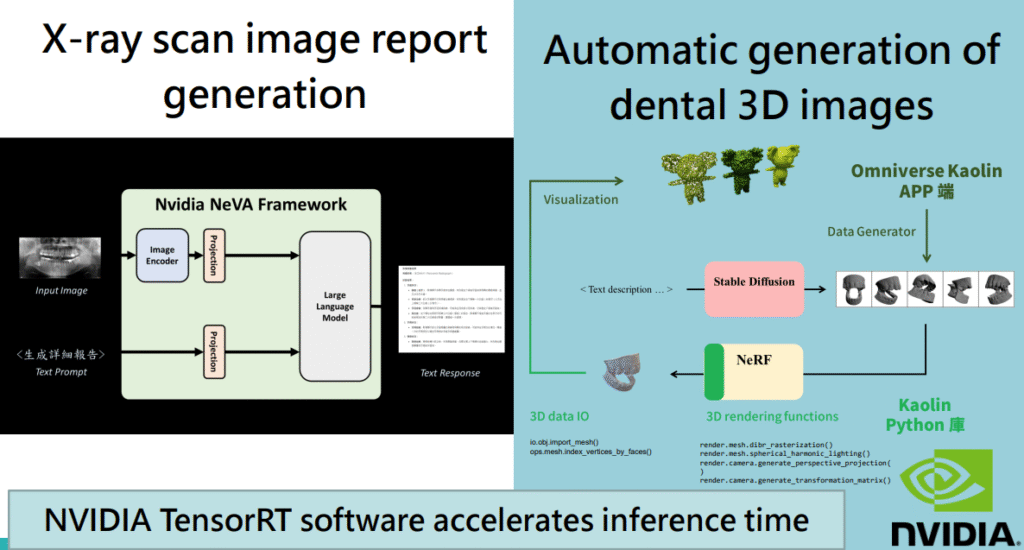

- From 2D Images to Structured Reports (NVIDIA NeVA Framework Application):

- Challenge: Interpreting medical images and writing accurate, comprehensive reports is a time-consuming task requiring high expertise.

- AI Solution: We use multimodal frameworks such as NVIDIA NeVA, which pair an Image Encoder with a Large Language Model to generate structured text reports directly from input 2D medical images (e.g., panoramic X-rays).

- Benefits: This not only significantly improves report writing efficiency and reduces human error but also provides important semantic information for subsequent 3D reconstruction. For example, AI can automatically identify root canal treatments, periodontal conditions, and bone structure abnormalities.

- Accelerated Inference: The inference process is accelerated by NVIDIA TensorRT™ software, ensuring real-time report generation.

- Automatic Generation of Dental 3D Images:

- Need: In dentistry, 3D imaging is crucial for planning implants, orthodontics, and maxillofacial surgeries.

- AI Workflow:

- Data Generator: Tools like the Omniverse Kaolin App can generate diverse dental data from existing datasets or through algorithms.

- Text/Image to 3D: Combining advanced generative techniques like Stable Diffusion and Neural Radiance Fields (NeRF), AI can automatically generate highly realistic 3D dental models based on text descriptions (e.g., “generate a molar model with a specific cavity”) or 2D images.

- 3D Data I/O and Rendering: The system supports standard 3D data formats (like .obj) and possesses powerful 3D rendering capabilities to clearly display model details.

- Python Library Support: Tools like the Kaolin Python library simplify 3D data processing and model manipulation.

- X-ray Scan Image Report Generation:

- We have developed an intuitive demonstration system (LLaVA Chatbot) where users can upload medical images (such as bone marrow MRIs or chest X-rays) and the AI generates a detailed image analysis report. This showcases AI’s potential in image interpretation and report automation.
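
To make "structured report" concrete, the following is a minimal sketch of how such a report could be represented and serialized. It is not the schema used by NeVA or by the demonstration system: the class names, field names, and sample values (`Finding`, `StructuredReport`, `category`, `location`, "tooth 36") are all hypothetical and chosen only for illustration.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List


@dataclass
class Finding:
    """One observation in a report. All field names here are hypothetical."""
    category: str   # e.g. "root canal treatment"
    location: str   # free-text anatomical site
    note: str = ""  # optional remark


@dataclass
class StructuredReport:
    """A report as a modality label plus a list of findings (illustrative only)."""
    modality: str
    findings: List[Finding] = field(default_factory=list)

    def to_json(self) -> str:
        # asdict recurses into nested dataclasses, so findings become plain dicts.
        return json.dumps(asdict(self), indent=2)


# Made-up example values, not output from any real model.
report = StructuredReport(modality="panoramic X-ray")
report.findings.append(Finding("root canal treatment", "tooth 36", "previously treated"))
report.findings.append(Finding("periodontal condition", "lower anterior"))
print(report.to_json())
```

Keeping findings as typed records rather than free text is one way downstream steps, such as 3D reconstruction, could consume the semantic information the report carries.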

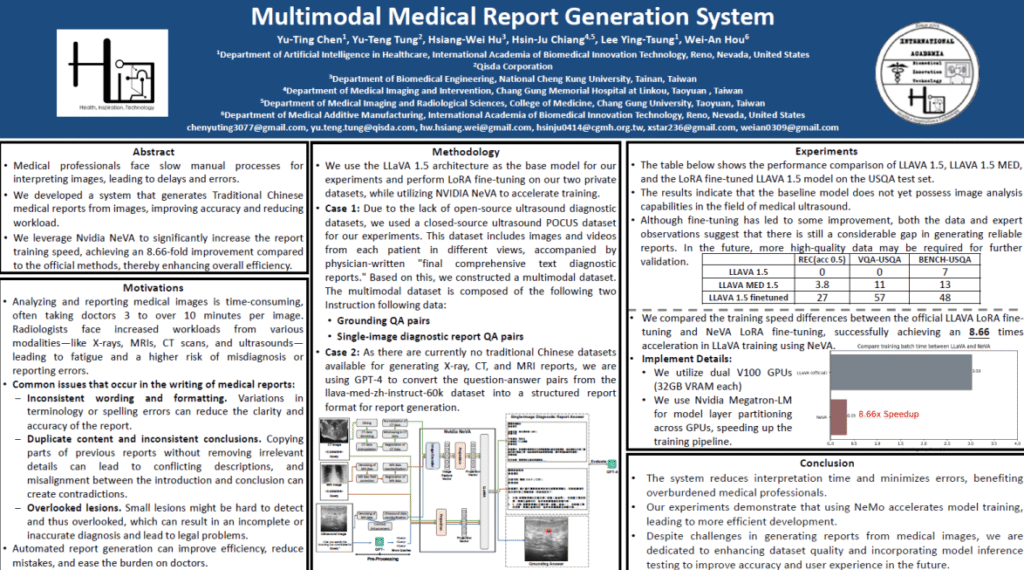
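
The `.obj` format mentioned in the dental workflow above is plain text, which makes it easy to inspect. In practice a library such as Kaolin would handle loading; the sketch below uses only the Python standard library and reads just the vertex (`v`) and face (`f`) records, ignoring normals, texture coordinates, and materials. The function names `parse_obj` and `write_obj` are illustrative, not part of any library.

```python
def parse_obj(text):
    """Parse 'v' and 'f' records from Wavefront .obj text.

    Returns (vertices, faces), where faces hold 0-based vertex indices.
    """
    vertices, faces = [], []
    for line in text.splitlines():
        parts = line.split()
        if not parts or parts[0].startswith("#"):
            continue  # skip blank lines and comments
        if parts[0] == "v":
            vertices.append(tuple(float(x) for x in parts[1:4]))
        elif parts[0] == "f":
            # Face entries look like "i", "i/t", "i//n" or "i/t/n"; keep only i.
            idx = [int(p.split("/")[0]) for p in parts[1:]]
            # .obj indices are 1-based; negative ones count back from the
            # vertices defined so far.
            faces.append(tuple(i - 1 if i > 0 else len(vertices) + i for i in idx))
    return vertices, faces


def write_obj(vertices, faces):
    """Serialize vertices and 0-based faces back to .obj text."""
    lines = [f"v {x:g} {y:g} {z:g}" for x, y, z in vertices]
    lines += ["f " + " ".join(str(i + 1) for i in face) for face in faces]
    return "\n".join(lines) + "\n"


SAMPLE = """# a single triangle
v 0 0 0
v 1 0 0
v 0 1 0
f 1 2 3
"""

verts, faces = parse_obj(SAMPLE)
print(len(verts), "vertices,", len(faces), "face(s)")
```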

- Multimodal Medical Report Generation System (MMRGS):

- Further, we have built a Multimodal Medical Report Generation System based on LLaVA 1.5, fine-tuned with LoRA on our private datasets. This system can process images from different modalities like X-ray, CT, and MRI, and is trained with physician-written “final comprehensive text diagnostic reports” to generate reports more aligned with clinical needs.

- Accelerated Training: NVIDIA NeVA is again used to accelerate the training process, achieving up to an 8.66x speedup compared to official methods. NVIDIA Megatron-LM™ is utilized for model layer partitioning across GPUs, effectively leveraging multi-GPU resources.
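
For readers unfamiliar with LoRA, the idea behind the fine-tuning step can be sketched in a few lines. Instead of updating a frozen weight matrix W, LoRA trains two small matrices A and B and applies W + (alpha / r) · B · A. The code below is a toy illustration in plain Python, not the MMRGS training code; the matrix sizes, rank, and `alpha` value are arbitrary assumptions.

```python
import random


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def lora_effective_weight(w, a, b, alpha):
    """Return W + (alpha / r) * B @ A.

    w: d_out x d_in frozen weight, b: d_out x r, a: r x d_in.
    """
    r = len(a)
    scale = alpha / r
    delta = matmul(b, a)
    return [[wij + scale * dij for wij, dij in zip(wrow, drow)]
            for wrow, drow in zip(w, delta)]


def lora_param_count(d_out, d_in, r):
    """Trainable parameters in the adapter: B (d_out x r) plus A (r x d_in)."""
    return r * (d_out + d_in)


# Toy sizes, chosen for illustration only.
d_out, d_in, r, alpha = 6, 8, 2, 4
rng = random.Random(0)
w = [[rng.gauss(0, 1) for _ in range(d_in)] for _ in range(d_out)]
a = [[rng.gauss(0, 0.01) for _ in range(d_in)] for _ in range(r)]
b = [[0.0] * r for _ in range(d_out)]  # B starts at zero, so training starts from W

unchanged = lora_effective_weight(w, a, b, alpha) == w
full = 4096 * 4096                       # a hypothetical 4096 x 4096 layer
adapter = lora_param_count(4096, 4096, 8)
print(unchanged, full, adapter)
```

The parameter count shows why the approach is attractive for fine-tuning on a private dataset: for the hypothetical layer above, the adapter trains well under 1% of the weights the full matrix contains.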

AI-Empowered Automated 3D Surgical Modeling

Precise 3D surgical models are crucial for preoperative planning, surgical navigation, and the design of personalized implants.

- From Text/Image to 3D Models (Text-to-3D / Image-to-3D):

- DreamGaussian & Shap-E & Point-E: We explore various advanced AI techniques for directly generating 3D models from text descriptions or 2D images.

- For example, by inputting a text prompt like “a delicious hamburger,” DreamGaussian can generate a corresponding 3D hamburger model.

- Shap-E can generate a 3D shark from “a red shark.”

- Point-E can generate a point-cloud representation of a 3D coffee cup from “a cup of coffee.”

- Similarly, these technologies can generate corresponding 3D models from single or multiple 2D images (e.g., cartoon character pictures).

- Medical Application Potential: These technologies offer the possibility of rapidly generating preliminary anatomical structure models, lesion models, or implant concept models.

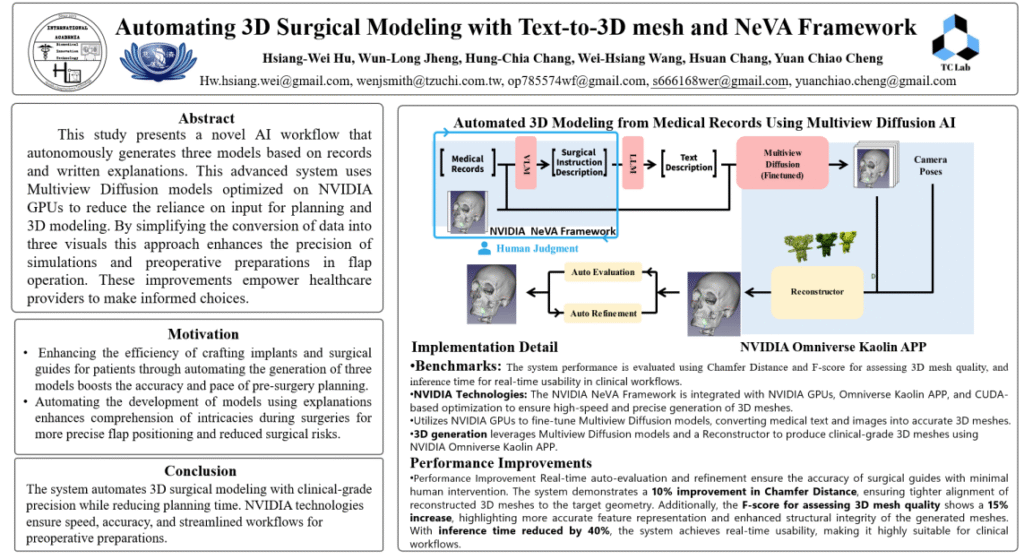

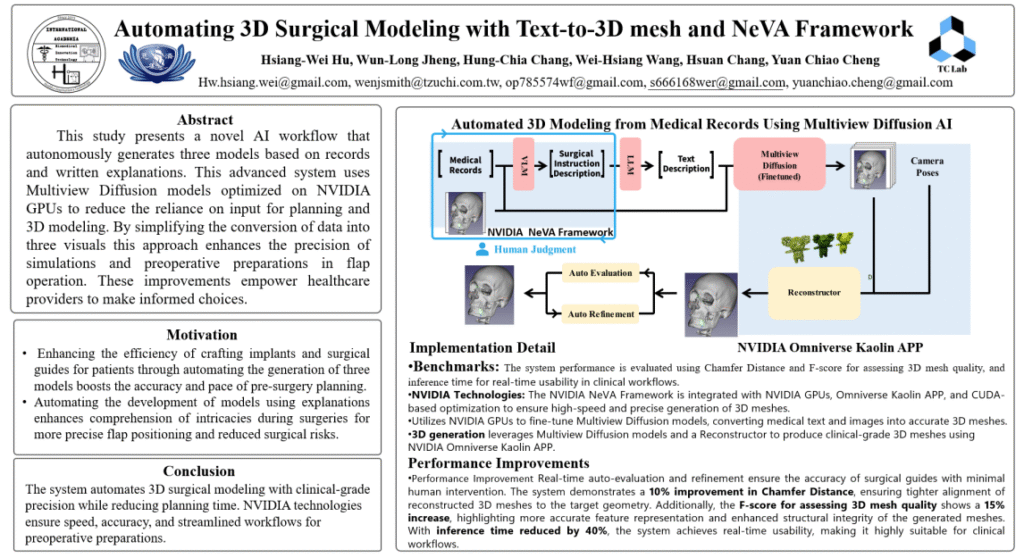

- Automated 3D Surgical Modeling with Multiview Diffusion AI:

- Clinical Need: Traditional 3D surgical modeling relies on manual operations, which are time-consuming and vary in precision.

- AI Workflow:

- Input: Medical Records and Surgical Descriptions/Explanations.

- NVIDIA NeVA Framework: Again plays a central role in processing text and potential image inputs.

- Multiview Diffusion Model (Finetuned): Utilizes a multiview diffusion model optimized on NVIDIA GPUs to convert text and image information into 2D images from multiple viewpoints.

- 3D Reconstructor: Reconstructs the multiview images into clinical-grade 3D mesh models.

- NVIDIA Omniverse Kaolin App: Provides a platform for evaluating (e.g., Chamfer Distance, F-score), optimizing, and visualizing 3D models.

- Performance Improvements: The system demonstrates a 10% improvement in Chamfer Distance (a lower value, meaning tighter alignment of reconstructed 3D meshes to the target geometry), a 15% increase in F-score (more accurate feature representation and enhanced structural integrity), and a 40% reduction in inference time, making it usable in real time in clinical settings.

- Goal: To autonomously generate 3D models from medical records and written explanations, simplifying data conversion, enhancing the precision of simulations and preoperative preparation, and empowering healthcare providers to make more informed choices.
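
The two evaluation metrics named above can be computed on point sets sampled from the reconstructed and target surfaces. The sketch below is a brute-force, standard-library illustration under stated assumptions, not the evaluation code used in the pipeline: it uses the mean squared nearest-neighbour convention for Chamfer Distance (other conventions exist), and the threshold `tau` and sample points are arbitrary.

```python
import math


def nearest_distances(src, dst):
    """For each point in src, the Euclidean distance to its nearest point in dst."""
    return [min(math.dist(p, q) for q in dst) for p in src]


def chamfer_distance(pred, target):
    """Symmetric Chamfer Distance: mean squared nearest-neighbour distance,
    summed over both directions. Lower is better."""
    d_pt = nearest_distances(pred, target)
    d_tp = nearest_distances(target, pred)
    return (sum(d * d for d in d_pt) / len(d_pt)
            + sum(d * d for d in d_tp) / len(d_tp))


def f_score(pred, target, tau):
    """F-score at distance threshold tau. Higher is better.

    Precision: share of predicted points within tau of the target.
    Recall: share of target points within tau of the prediction.
    """
    precision = sum(d <= tau for d in nearest_distances(pred, target)) / len(pred)
    recall = sum(d <= tau for d in nearest_distances(target, pred)) / len(target)
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Made-up points: the prediction matches one target point exactly,
# is slightly off on another, and far off on the third.
target = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
pred = [(0.0, 0.0, 0.1), (1.0, 0.0, 0.0), (0.0, 1.0, 0.5)]

cd = chamfer_distance(pred, target)
fs = f_score(pred, target, tau=0.2)
print(f"Chamfer Distance: {cd:.4f}, F-score: {fs:.4f}")
```

The brute-force search is quadratic in the number of points, so real evaluations on dense meshes typically rely on GPU implementations or spatial indexes instead.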

BIT Research Alliance’s Technological Advantages and Future Outlook

By integrating NVIDIA’s advanced hardware (GPUs) and software frameworks (NeMo, NeVA, Omniverse Kaolin, TensorRT), BIT Research Alliance has made significant progress in AI-driven 3D medical imaging and surgical modeling:

- Automation and Efficiency Improvement: Drastically reduces manual operations and improves the efficiency of image report generation and 3D modeling.

- Improved Precision and Consistency: AI models can generate more precise and consistent results, reducing human error.

- Facilitating Personalized Medicine: Provides strong technological support for personalized surgical planning and the design of custom medical devices.

- Revolutionizing Medical Education: Highly realistic 3D models offer medical students and young doctors more intuitive and safer learning and training tools.

In the future, we will continue to explore:

- Higher resolution and more realistic 3D model generation.

- Real-time interactive 3D surgical simulation.

- Integrating AI-generated 3D models with AR/VR technology for intraoperative navigation.

- Further expanding AI applications across different medical imaging modalities and clinical specialties.

Conclusion: AI Illuminates the 3D Vista, Empowering a New Future for Precision Medicine

From intelligent interpretation of 2D images to the automatic generation of high-precision 3D models, AI technology is profoundly changing how we observe, understand, and intervene with the human body. BIT Research Alliance is committed to being at the forefront of this transformation, leveraging the power of AI to bring clearer vision, more precise tools, and a better health future to clinicians and patients. We believe that a new era of visualized, personalized medicine driven by AI is within reach.